Introduction to Transfer Learning

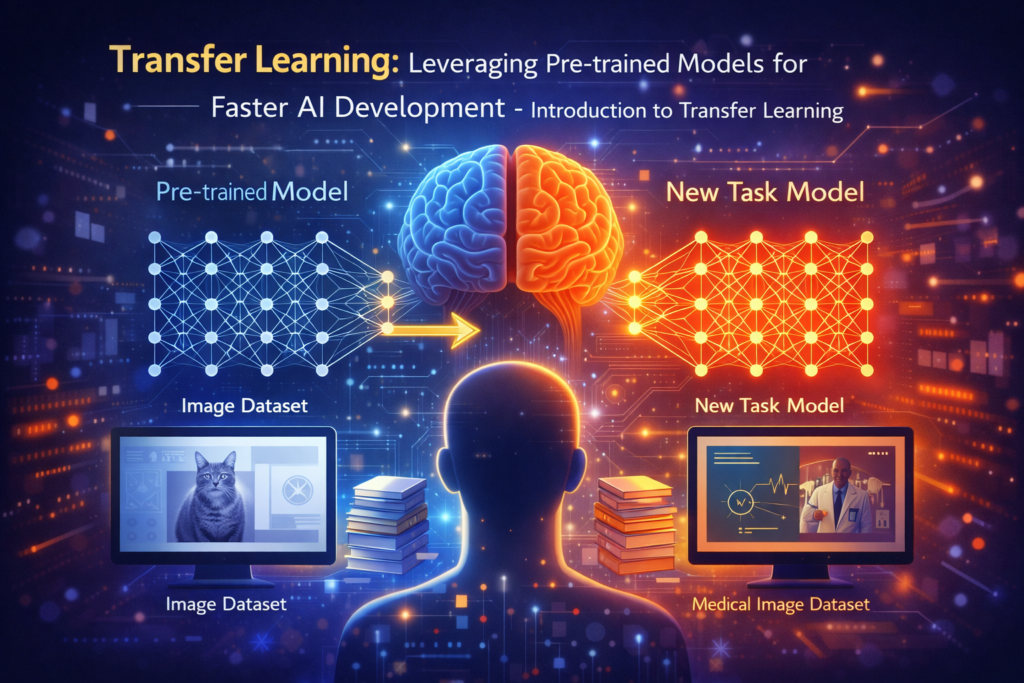

Transfer learning has reshaped the field of artificial intelligence by letting developers take knowledge gained from solving one problem and apply it to a different but related problem. Training a model from scratch often requires massive datasets and computational resources; transfer learning instead builds on pre-trained models, which can substantially reduce development time and often improves results, particularly when task-specific data is scarce.

Understanding the Fundamentals

What is Transfer Learning?

Transfer learning is a machine learning technique where a model developed for one task is reused as the starting point for a model on a second task. The core idea is that features learned by a neural network on a large dataset can be useful for other tasks, even if those tasks are different from the original training objective.

Why Transfer Learning Works

Deep neural networks learn hierarchical representations of data. In vision models, for example, early layers typically learn low-level features such as edges, textures, and simple shapes that are useful across many tasks, while later layers learn more task-specific features. By transferring the weights from early and middle layers, we can reuse this general knowledge while fine-tuning later layers for our specific task.

Types of Transfer Learning

There are several approaches to transfer learning: feature extraction (using a pre-trained model as a fixed feature extractor), fine-tuning (unfreezing some layers and training them on new data), and domain adaptation (adapting a model from one domain to another). Each approach has its advantages depending on the similarity between source and target tasks and the amount of available data.

Pre-trained Models in Practice

Image Classification Models

For computer vision tasks, models pre-trained on ImageNet have become the standard starting point. VGG, ResNet, EfficientNet, and Vision Transformers have learned rich visual representations from millions of images. These models can be adapted for medical imaging, satellite imagery analysis, manufacturing defect detection, and countless other applications.

Natural Language Processing Models

The NLP field has been transformed by large language models like BERT, GPT, and their successors. These models, pre-trained on massive text corpora, understand language structure, semantics, and context. They can be fine-tuned for sentiment analysis, question answering, named entity recognition, and other language tasks with relatively small domain-specific datasets.

Audio and Speech Models

Pre-trained audio models like Wav2Vec and Whisper have learned to understand speech patterns and acoustic features. These can be transferred to speech recognition, speaker identification, emotion detection, and music classification tasks.

Implementing Transfer Learning

Choosing the Right Pre-trained Model

Select a model based on the similarity between the source and target domains. If working on medical images, a model pre-trained on general images may still be useful, but one pre-trained on medical data would be preferable. Consider model size, inference speed requirements, and the framework you are using (TensorFlow, PyTorch, etc.).

Feature Extraction Approach

In this approach, you freeze all layers of the pre-trained model and only train a new classifier head. This works well when you have limited data or when the source and target tasks are very similar. The pre-trained layers act as a fixed feature extractor, converting input data into meaningful representations.
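
The idea can be sketched in plain Python, without any deep learning framework. In the minimal sketch below, `FROZEN_WEIGHTS` and `extract_features` are hypothetical stand-ins for a pre-trained backbone, and the four data points are made up for illustration. Only the logistic-regression head (`w`, `b`) is trained; the backbone weights are never touched.

```python
import math

# Hypothetical stand-in for a pre-trained backbone: a fixed (frozen) linear
# layer followed by a ReLU. These weights are never updated below.
FROZEN_WEIGHTS = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]]

def extract_features(x):
    """Map a raw input to features using the frozen layer."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(inputs, labels, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    # Features can be computed once up front because the backbone is fixed.
    feats = [extract_features(x) for x in inputs]
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of binary cross-entropy w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(x, w, b):
    f = extract_features(x)
    return 1 if sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) >= 0.5 else 0

# Toy, made-up dataset with two classes.
inputs = [[2.0, 0.0], [3.0, 0.5], [0.0, 2.0], [-1.0, 3.0]]
labels = [1, 1, 0, 0]
w, b = train_head(inputs, labels)
```

Because the backbone is frozen, its features can be cached once and reused every epoch, which is part of why this approach is cheap when data and compute are limited.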

Fine-tuning Strategy

Fine-tuning involves unfreezing some or all layers of the pre-trained model and continuing to train them on the new data. Start by unfreezing only the top layers and gradually unfreeze more if needed. Use a lower learning rate for the pre-trained layers than for any newly added layers, so that learned features are preserved while the model still adapts to the new task.
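
One way to make this concrete is to describe, per layer, whether it is trainable and which learning rate it gets. The sketch below is framework-agnostic and purely illustrative: the layer names, the helper `build_param_groups`, and the default learning rates are assumptions, not part of any particular library.

```python
def build_param_groups(backbone_layers, head_layers, num_unfrozen,
                       backbone_lr=1e-5, head_lr=1e-3):
    """Return one config dict per layer.

    Only the last `num_unfrozen` backbone layers are trainable, and they use
    the lower `backbone_lr`. Frozen layers get a learning rate of 0.0.
    New head layers are always trainable and use the higher `head_lr`.
    """
    groups = []
    first_unfrozen = len(backbone_layers) - num_unfrozen
    for i, name in enumerate(backbone_layers):
        trainable = i >= first_unfrozen
        groups.append({
            "layer": name,
            "trainable": trainable,
            "lr": backbone_lr if trainable else 0.0,
        })
    for name in head_layers:
        groups.append({"layer": name, "trainable": True, "lr": head_lr})
    return groups

# Hypothetical four-block backbone with a new classifier head.
groups = build_param_groups(
    ["block1", "block2", "block3", "block4"], ["classifier"], num_unfrozen=1
)
```

In a real framework, a structure like this would typically be translated into per-parameter optimizer settings; the exact mechanism depends on the framework in use.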

Progressive Fine-tuning

A sophisticated approach is to progressively unfreeze layers during training. Start with only the classification head, train for a few epochs, then unfreeze the top convolutional block, train again, and continue this process. This gradual approach helps prevent catastrophic forgetting of pre-trained knowledge.
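
The staged process described above can be written down as an explicit schedule. This is a minimal sketch: the block names, the `"head"` label, and the helper `progressive_unfreezing_schedule` are hypothetical, and the actual training loop for each stage is left out.

```python
def progressive_unfreezing_schedule(backbone_layers, epochs_per_stage):
    """Build a list of (stage, epochs, trainable_layers) tuples.

    Stage 0 trains only the head. Each later stage additionally unfreezes
    the next block, working from the top of the backbone downward.
    """
    stages = []
    n = len(backbone_layers)
    for stage in range(n + 1):
        unfrozen = backbone_layers[n - stage:]  # empty list at stage 0
        stages.append((stage, epochs_per_stage, unfrozen + ["head"]))
    return stages

# Hypothetical three-block backbone, two epochs per stage.
schedule = progressive_unfreezing_schedule(["block1", "block2", "block3"], 2)
for stage, epochs, trainable in schedule:
    # A real loop would mark `trainable` layers as trainable here,
    # then train for `epochs` epochs before moving to the next stage.
    pass
```

In practice one might stop before the final stage if validation performance stops improving, rather than always unfreezing every block.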

Best Practices and Techniques

Data Augmentation

When fine-tuning with limited data, aggressive data augmentation becomes crucial. For images, use rotations, flips, color adjustments, and more advanced techniques such as Cutout or mixup. For text, consider back-translation, synonym replacement, or contextual augmentation. Augmentation helps prevent overfitting and improves generalization.
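
As an illustration of one of these techniques, the sketch below implements the core of mixup on plain Python lists: two examples and their one-hot labels are blended with a weight drawn from a Beta(alpha, alpha) distribution. The function name, the `alpha=0.2` default, and the toy inputs are assumptions chosen for the example.

```python
import random

def mixup(x1, y1, x2, y2, alpha=0.2, rng=random):
    """Blend two examples and their one-hot labels.

    The mixing weight `lam` is sampled from Beta(alpha, alpha), so the same
    weight is applied to both the inputs and the labels.
    """
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam

# Two toy "images" (flattened to two values) with one-hot labels.
rng = random.Random(0)  # seeded generator so runs are repeatable
mixed_x, mixed_y, lam = mixup([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], rng=rng)
```

The mixed label is a soft target, so the loss function used for training needs to accept probability vectors rather than only class indices.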

Learning Rate Scheduling

Use differential learning rates where pre-trained layers have lower learning rates than new layers. Implement learning rate warmup to gradually increase the learning rate at the start of training. Cosine annealing or reduce-on-plateau schedulers help find optimal weights during fine-tuning.
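
A minimal sketch of linear warmup followed by cosine annealing, combined with differential learning rates, might look like the following. The function names, the `backbone_scale=0.1` ratio, and the step counts are illustrative assumptions rather than recommended values.

```python
import math

def lr_at_step(step, total_steps, warmup_steps, peak_lr, min_lr=0.0):
    """Linear warmup to `peak_lr`, then cosine decay down to `min_lr`."""
    if step < warmup_steps:
        # Ramp up linearly; the last warmup step reaches peak_lr.
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1 + math.cos(math.pi * progress))

def group_lrs(step, total_steps, warmup_steps, head_peak_lr, backbone_scale=0.1):
    """Differential rates: the pre-trained backbone follows the same schedule
    as the new head, scaled down by `backbone_scale`."""
    head_lr = lr_at_step(step, total_steps, warmup_steps, head_peak_lr)
    return {"head": head_lr, "backbone": backbone_scale * head_lr}

# Learning rates for both groups over a hypothetical 100-step run.
lrs = [group_lrs(s, 100, 10, 1e-3) for s in range(101)]
```

Keeping the backbone rate as a fixed fraction of the head rate is only one option; some practitioners instead decay the rate layer by layer from the top of the network downward.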

Regularization Techniques

Apply dropout in new layers, use weight decay, and consider early stopping based on validation loss. These techniques help prevent overfitting, which is especially important when fine-tuning large models on small datasets. Label smoothing can also improve generalization in classification tasks.
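
Two of these techniques are simple enough to sketch directly. The helpers below are illustrative: `smooth_labels` applies uniform label smoothing to a one-hot vector, and `early_stopping_epoch` reports where training would stop given a list of validation losses. The `epsilon`, `patience`, and loss values are made-up examples.

```python
def smooth_labels(one_hot, epsilon=0.1):
    """Uniform label smoothing: move `epsilon` of the probability mass
    from the true class to an even spread over all classes."""
    k = len(one_hot)
    return [(1 - epsilon) * v + epsilon / k for v in one_hot]

def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch index at which training stops.

    Training stops once the validation loss has failed to improve on the
    best value seen so far for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1  # never triggered: ran all epochs

smoothed = smooth_labels([0.0, 1.0, 0.0], epsilon=0.1)
stop_epoch = early_stopping_epoch([1.0, 0.8, 0.7, 0.75, 0.72, 0.71], patience=2)
```

With early stopping, it is common to also keep a copy of the weights from the best epoch and restore them at the end, since the final epoch is by construction not the best one.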

Handling Domain Shift

When source and target domains differ significantly, consider domain adaptation techniques. Adversarial training can help align feature distributions between domains. Alternatively, use intermediate pre-training on an unlabeled dataset from the target domain before fine-tuning on labeled data.

Popular Frameworks and Tools

Hugging Face Transformers

The Hugging Face library provides easy access to thousands of pre-trained models for NLP, vision, and audio tasks. With just a few lines of code, you can download a pre-trained model, add a task-specific head, and fine-tune on your data. The library handles tokenization, data loading, and training loops.

TensorFlow Hub and Keras Applications

TensorFlow Hub offers pre-trained models as reusable modules. Keras Applications provides popular image classification models with pre-trained ImageNet weights. These can be easily integrated into TensorFlow/Keras pipelines for quick experimentation.

PyTorch Image Models (timm)

The timm library provides state-of-the-art image models with pre-trained weights. It includes efficient training scripts, augmentation pipelines, and tools for fine-tuning. The library covers everything from classic ResNets to modern architectures like ConvNeXt and Vision Transformers.

Case Studies and Applications

Medical Imaging

Transfer learning has transformed medical image analysis. Models pre-trained on natural images can be fine-tuned to detect tumors in X-rays, segment organs in CT scans, or classify skin lesions in dermoscopy images. Even with limited labeled medical data, transfer learning can achieve clinically useful accuracy on some tasks.

Document Analysis

BERT and its variants have been fine-tuned for document classification, legal contract analysis, financial report summarization, and customer support ticket routing. A general language model becomes a specialized domain expert through targeted fine-tuning.

Industrial Applications

Manufacturing companies use transfer learning for quality control, detecting defects in products using cameras. Pre-trained vision models are fine-tuned on examples of good and defective products, enabling automated inspection systems that improve over time.

Challenges and Limitations

Negative Transfer

When source and target domains are too different, transfer learning can hurt performance. The pre-trained features may not be relevant or may even be misleading for the new task. Careful evaluation is necessary to ensure transfer learning is actually helping.

Computational Requirements

While transfer learning is more efficient than training from scratch, fine-tuning large models still requires significant computational resources. Techniques like parameter-efficient fine-tuning (LoRA, adapters) can reduce memory and compute requirements while maintaining performance.
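
The savings from LoRA come from simple arithmetic. LoRA keeps a weight matrix of shape d_out x d_in frozen and trains a low-rank update formed by two smaller matrices of shapes d_out x r and r x d_in. The sketch below compares the parameter counts; the layer size of 768 and the rank of 8 are illustrative assumptions, not values from any specific model configuration.

```python
def lora_param_counts(d_in, d_out, rank):
    """Compare trainable parameters for one linear layer.

    Full fine-tuning updates every entry of the d_out x d_in weight matrix.
    LoRA instead trains two factors, B (d_out x rank) and A (rank x d_in).
    """
    full = d_in * d_out
    lora = rank * (d_in + d_out)
    return full, lora

full, lora = lora_param_counts(d_in=768, d_out=768, rank=8)
fraction = lora / full  # share of the layer's parameters that LoRA trains
```

For this hypothetical layer, LoRA trains 12,288 parameters instead of 589,824, roughly 2% of the original count. The frozen weights still have to be held in memory for the forward pass, so the reduction applies mainly to gradients and optimizer state.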

Bias and Fairness

Pre-trained models may encode biases present in their training data. When fine-tuning, these biases can transfer to downstream tasks. Careful fairness evaluation, and in some cases bias mitigation techniques, may be necessary, especially for applications affecting people.

Future Directions

Foundation Models

The trend toward massive pre-trained “foundation models” continues. Models trained on diverse data across modalities (text, images, audio) are becoming starting points for many applications. Understanding how to effectively adapt these models is becoming increasingly important.

Few-shot and Zero-shot Learning

Advanced transfer learning enables learning from very few examples (few-shot) or even no examples (zero-shot). Large language models can perform tasks they were not explicitly trained for through clever prompting, representing an extreme form of transfer learning.
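
To show what prompting looks like in practice, the sketch below assembles a few-shot prompt as a plain string. The `Input:`/`Label:` layout and the helper `build_few_shot_prompt` are assumptions made for illustration; real models may respond better to other formats, and the call to an actual language model is left out.

```python
def build_few_shot_prompt(task_description, examples, query):
    """Assemble a prompt from a task description, labeled examples, and a query.

    Passing an empty `examples` list yields a zero-shot prompt.
    """
    lines = [task_description, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Label:")  # left open for the model to complete
    return "\n".join(lines)

few_shot = build_few_shot_prompt(
    "Classify sentiment.", [("Great!", "positive")], "Awful."
)
zero_shot = build_few_shot_prompt("Classify sentiment.", [], "Awful.")
```

No model weights change in this setting: the examples in the prompt are the only task-specific signal the model receives.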

Continual Learning

Research into continual learning aims to enable models to learn new tasks without forgetting old ones. This extension of transfer learning is crucial for real-world systems that must adapt to changing requirements over time.

Conclusion

Transfer learning has democratized AI development, enabling practitioners to build powerful models without massive datasets or compute budgets. By standing on the shoulders of pre-trained models, developers can focus on their specific problems rather than reinventing foundational capabilities. As pre-trained models grow more capable and accessible, transfer learning will remain a cornerstone technique in the AI practitioner’s toolkit, enabling rapid development of specialized AI solutions across every industry.