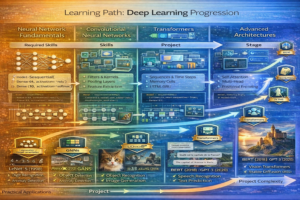

Learning Path: Deep Learning Progression

Duration: 10-12 weeks | Weekly Commitment: 20-25 hours | Prerequisites: Python for AI/ML path or equivalent experience

Path Overview

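Master neural networks, from the fundamentals to state-of-the-art architectures. This path covers the mathematical foundations, hands-on implementation (from scratch in NumPy, then with TensorFlow/Keras), and practical applications in computer vision, sequence modeling, and NLP.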

Phase 1: Neural Network Fundamentals (Weeks 1-2)

Module 1.1: What are Neural Networks?

- The neuron model and biological inspiration

- Forward propagation (input → output)

- Activation functions (sigmoid, ReLU, tanh)

- Network architecture (layers, neurons)

- Why deep learning works
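The sketch below illustrates forward propagation through a small network. It uses the three activation functions listed above and plain Python lists in place of a framework. The layer sizes and weight values are arbitrary, hypothetical choices made for illustration.

```python
import math

# Activation functions applied to a single pre-activation value z
def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def relu(z: float) -> float:
    return max(0.0, z)

def tanh(z: float) -> float:
    return math.tanh(z)

def dense_forward(x, weights, biases, activation):
    """Forward pass through one fully connected layer.

    weights[j][i] is the weight from input i to neuron j; each neuron
    computes activation(sum_i weights[j][i] * x[i] + biases[j]).
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * xi for w, xi in zip(w_row, x)) + b  # weighted sum plus bias
        outputs.append(activation(z))
    return outputs

# Forward propagation: 3 inputs -> 2 ReLU hidden neurons -> 1 sigmoid output
x = [1.0, 2.0, -1.0]
hidden = dense_forward(x, [[0.5, -0.25, 0.25], [0.25, 0.5, -0.5]], [0.0, 0.5], relu)
output = dense_forward(hidden, [[1.0, -1.0]], [0.5], sigmoid)
```

In this example the first hidden neuron's weighted sum is negative, so ReLU outputs exactly zero for it. The sigmoid then squashes the final value into the range (0, 1), which is why it is a common choice for binary-probability outputs.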

Module 1.2: Training Neural Networks

- Loss functions (MSE, cross-entropy)

- Gradient descent algorithm

- Backpropagation (chain rule in action)

- Understanding learning rates

- Optimization algorithms (SGD, Adam)
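Here is a minimal sketch of the two loss functions named above, plus plain full-batch gradient descent on a one-variable linear model. It assumes a toy dataset generated from y = 2x + 1 and a hand-picked learning rate of 0.05. It is not SGD with mini-batches, and it does not implement Adam.

```python
import math

def mse(y_true, y_pred):
    """Mean squared error, typical for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_prob, eps=1e-12):
    """Binary cross-entropy for labels in {0, 1} and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

# Full-batch gradient descent on y_hat = w * x + b with MSE loss
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # toy data generated by y = 2x + 1
w, b = 0.0, 0.0
learning_rate = 0.05
for _ in range(2000):
    preds = [w * x + b for x in xs]
    n = len(xs)
    # Partial derivatives of L = mean((w*x + b - y)^2)
    grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
    grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
    w -= learning_rate * grad_w  # step against the gradient
    b -= learning_rate * grad_b

final_loss = mse(ys, [w * x + b for x in xs])
```

The learning rate matters. On this particular data, a much larger value such as 0.5 makes each update overshoot and the parameters diverge, while very small values converge slowly.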

Practice: Implement backpropagation from scratch in NumPy
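As a starting point for this exercise, the sketch below hand-derives backpropagation for a tiny network with 1 input, 2 tanh hidden units, and 1 linear output. It uses a squared-error loss on a single example. Plain Python lists stand in for NumPy arrays so the sketch runs without dependencies. The flat parameter layout is a hypothetical choice made for this example. A central finite-difference check confirms the analytic gradients, which is a useful habit when writing backprop by hand.

```python
import math

# Hypothetical flat parameter layout for this sketch:
# p = [w1_0, w1_1, b1_0, b1_1, w2_0, w2_1, b2]

def forward(p, x):
    z = [p[0] * x + p[2], p[1] * x + p[3]]    # hidden pre-activations
    h = [math.tanh(zj) for zj in z]           # hidden activations
    y_hat = p[4] * h[0] + p[5] * h[1] + p[6]  # linear output
    return y_hat, h

def loss(p, x, y):
    y_hat, _ = forward(p, x)
    return 0.5 * (y_hat - y) ** 2

def backprop(p, x, y):
    """Gradient of the loss w.r.t. every parameter via the chain rule."""
    y_hat, h = forward(p, x)
    d_out = y_hat - y                                   # dL/dy_hat
    d_h = [d_out * p[4], d_out * p[5]]                  # dL/dh_j
    d_z = [d_h[j] * (1 - h[j] ** 2) for j in range(2)]  # tanh'(z) = 1 - tanh(z)^2
    return [d_z[0] * x, d_z[1] * x,        # dL/dw1_j
            d_z[0], d_z[1],                # dL/db1_j
            d_out * h[0], d_out * h[1],    # dL/dw2_j
            d_out]                         # dL/db2

def numerical_grad(p, x, y, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    grads = []
    for i in range(len(p)):
        plus, minus = list(p), list(p)
        plus[i] += eps
        minus[i] -= eps
        grads.append((loss(plus, x, y) - loss(minus, x, y)) / (2 * eps))
    return grads

params = [0.5, -0.3, 0.1, 0.2, 0.7, -0.4, 0.05]
x, y = 1.5, 0.8
max_diff = max(abs(a - n) for a, n in
               zip(backprop(params, x, y), numerical_grad(params, x, y)))

# A few gradient-descent steps driven by the backprop gradients
initial_loss = loss(params, x, y)
for _ in range(100):
    g = backprop(params, x, y)
    params = [pi - 0.1 * gi for pi, gi in zip(params, g)]
final_loss = loss(params, x, y)
```

When you port this to NumPy, the per-unit lists become vectors and the per-unit products become matrix and element-wise operations. The structure of the backward pass stays the same.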

Phase 2: Deep Learning with TensorFlow/Keras (Weeks 3-5)

Module 2.1: Building Your First Neural Network

- Setting up TensorFlow/Keras environment

- Creating simple neural networks

- Compiling and training models

- Evaluating model performance

- Making predictions on new data

Module 2.2: Handling Real Data

- Data preprocessing and normalization

- Dealing with overfitting (regularization)

- Using validation sets properly

- Early stopping to prevent overfitting

- Dropout and other regularization techniques
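Two of the ideas above are framework-independent, and this minimal sketch shows both. The first is normalizing features with statistics computed on the training set only, which avoids leaking validation data into training. The second is early stopping with a patience counter. The validation-loss values below are made up for illustration, and the `EarlyStopping` class here is a hypothetical helper, not a library API.

```python
import statistics

def fit_standardizer(train_column):
    """Compute mean/std on the TRAINING data only."""
    mean = statistics.fmean(train_column)
    std = statistics.pstdev(train_column) or 1.0  # guard against zero variance
    return mean, std

def standardize(column, mean, std):
    return [(v - mean) / std for v in column]

class EarlyStopping:
    """Stop when validation loss has not improved by more than min_delta
    for `patience` consecutive epochs (hypothetical helper for this sketch)."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = -1
        self.wait = 0

    def should_stop(self, epoch, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.wait = val_loss, epoch, 0  # improvement
        else:
            self.wait += 1  # no improvement this epoch
        return self.wait >= self.patience

mean, std = fit_standardizer([1.0, 3.0, 1.0, 3.0])  # training feature values
val_scaled = standardize([1.0, 3.0, 5.0], mean, std)  # reuse training statistics

val_losses = [0.9, 0.7, 0.6, 0.62, 0.61, 0.65, 0.5]  # illustrative values
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, val_loss in enumerate(val_losses):
    if stopper.should_stop(epoch, val_loss):
        stopped_at = epoch
        break
```

In Keras, the built-in `tf.keras.callbacks.EarlyStopping` callback implements the same idea through its `monitor`, `patience`, and `restore_best_weights` arguments. The last of these rolls the model back to the weights from the best epoch.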

Module 2.3: Understanding Layers

- Dense layers (fully connected)

- Different layer types and when to use them

- Batch normalization

- Architecture best practices
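Batch normalization is easiest to understand from its training-mode forward pass. The minimal sketch below normalizes each feature across the batch, then applies a scale (gamma) and a shift (beta), which a real network would learn. It omits the running averages that frameworks track for inference, and it uses plain nested lists shaped [batch][features] as a simplifying assumption.

```python
import math

def batch_norm_forward(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalization for a batch of feature vectors.

    batch: list of N samples, each a list of D features.
    Each feature is normalized with the batch mean and the biased
    (divide-by-N) batch variance, then scaled by gamma and shifted by beta.
    """
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / n
        for i in range(n):
            x_hat = (col[i] - mean) / math.sqrt(var + eps)  # roughly zero mean, unit variance
            out[i][j] = gamma[j] * x_hat + beta[j]          # learnable scale and shift
    return out

batch = [[1.0, 10.0], [3.0, 30.0]]
out = batch_norm_forward(batch, gamma=[2.0, 1.0], beta=[0.5, 0.0])
```

Both input features end up on the same scale even though the second feature is ten times larger. This is one reason batch normalization tends to make training less sensitive to input scaling.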

Practice Project: Build a classifier for the MNIST dataset

Phase 3: Convolutional Neural Networks (Weeks 6-7)

Module 3.1: Convolutions for Images

- How convolution works (visual understanding)

- Filters and feature maps

- Pooling operations

- Building convolutional layers
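The sketch below spells out a "valid" convolution (no padding) and non-overlapping max pooling. It is written as cross-correlation, which is what deep learning libraries actually compute under the name convolution. It assumes a single-channel image and a square kernel. For padding P and stride S, the output height is (H - K + 2P) / S + 1, and this sketch fixes P = 0. The hand-made vertical-edge kernel is an illustrative choice; a CNN would learn its filters instead.

```python
def conv2d(image, kernel, stride=1):
    """Valid (unpadded) 2D cross-correlation of one channel with a square kernel."""
    H, W = len(image), len(image[0])
    K = len(kernel)
    out_h = (H - K) // stride + 1  # (H - K + 2P) / S + 1 with P = 0
    out_w = (W - K) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            r, c = i * stride, j * stride
            acc = 0.0
            for ki in range(K):
                for kj in range(K):
                    acc += image[r + ki][c + kj] * kernel[ki][kj]
            row.append(acc)  # one value of the feature map
        out.append(row)
    return out

def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling (stride equals window size); leftover edges are dropped."""
    return [
        [max(fmap[i + a][j + b] for a in range(size) for b in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]

image = [[0, 0, 1, 1, 1] for _ in range(5)]      # dark left, bright right
vertical_edge = [[-1, 0, 1] for _ in range(3)]   # responds to left-to-right increases
fmap = conv2d(image, vertical_edge)              # 5x5 input, 3x3 kernel -> 3x3 feature map
pooled = max_pool2d(fmap)
strided = conv2d(image, vertical_edge, stride=2)
```

The feature map is large where the filter straddles the dark-to-bright boundary and zero over the uniform region. Pooling then keeps the strongest response while shrinking the spatial size.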

Module 3.2: Building CNN Models

- Creating CNN architectures

- Classic architectures (LeNet, AlexNet, VGG)

- Transfer learning (using pre-trained models)

- Fine-tuning pre-trained models

Project: Build an image recognition model with a CNN

Phase 4: Recurrent Neural Networks (Weeks 8-9)

Module 4.1: Sequence Modeling

- Why RNNs for sequences (text, time series)

- The RNN architecture

- Vanishing gradient problem

- LSTM (Long Short-Term Memory)

- GRU (Gated Recurrent Unit)
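The vanishing gradient problem can be seen directly in a scalar vanilla RNN, h_t = tanh(w_h * h_{t-1} + w_x * x_t + b). By the chain rule, the gradient of the last hidden state with respect to the first is a product of one factor per time step, w_h * (1 - h_t^2). When each factor has magnitude below 1, the product shrinks exponentially with sequence length. The weights and the all-ones input in this sketch are arbitrary illustrative assumptions.

```python
import math

def rnn_forward(xs, w_h, w_x, b, h0=0.0):
    """Scalar vanilla RNN: h_t = tanh(w_h * h_{t-1} + w_x * x_t + b)."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w_h * hs[-1] + w_x * x + b))
    return hs

def grad_last_wrt_first(hs, w_h):
    """dh_T/dh_0 as a product over steps of tanh'(.) * w_h,
    where tanh' at step t equals 1 - h_t^2."""
    g = 1.0
    for h in hs[1:]:
        g *= (1 - h ** 2) * w_h
    return g

grads = {}
for length in (5, 20, 50):
    hs = rnn_forward([1.0] * length, w_h=0.5, w_x=0.5, b=0.0)
    grads[length] = grad_last_wrt_first(hs, w_h=0.5)
```

Because each factor here is at most 0.5, the gradient after 50 steps is smaller than 0.5^50. Information from early inputs therefore barely influences learning. LSTM and GRU cells mitigate this with gates that control how much of the previous state is carried forward.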

Module 4.2: Applications

- Sequence-to-sequence models

- Machine translation basics

- Text generation

- Time series prediction
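Before an LSTM can learn from a time series, the series has to be turned into supervised (input window, target) pairs. The minimal sketch below does this and splits the pairs chronologically rather than randomly, so validation data always comes after training data. The window length, horizon, and 80/20 split are illustrative assumptions, not recommended settings.

```python
def make_windows(series, window, horizon=1):
    """Turn a 1-D series into (inputs, target) pairs: each input is `window`
    consecutive values, and the target is the value `horizon` steps after it."""
    X, y = [], []
    for start in range(len(series) - window - horizon + 1):
        X.append(series[start:start + window])
        y.append(series[start + window + horizon - 1])
    return X, y

def chronological_split(X, y, train_fraction=0.8):
    """Split without shuffling so validation samples are later in time."""
    cut = int(len(X) * train_fraction)
    return (X[:cut], y[:cut]), (X[cut:], y[cut:])

series = [10, 11, 12, 13, 14, 15]
X, y = make_windows(series, window=3)
(train_X, train_y), (val_X, val_y) = chronological_split(X, y)
```

Shuffling before splitting would let the model train on values from after the validation period. That tends to make validation scores look better than real forecasting performance.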

Project: Stock price prediction with an LSTM

Phase 5: Modern Architectures & Applications (Weeks 10-12)

Module 5.1: Attention & Transformers

- Attention mechanism

- The Transformer architecture

- BERT and other pre-trained transformers

- Using Hugging Face transformers
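At the core of the Transformer is scaled dot-product attention, Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V. The minimal sketch below implements only that formula, with one head, no masking, and no learned projection matrices. The Q, K, and V values are made up so the weights are easy to inspect.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, computed one query row at a time.
    Q: [n_q][d_k], K: [n_k][d_k], V: [n_k][d_v]."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # how strongly this query attends to each key
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
out, weights = scaled_dot_product_attention(Q, K, V)
```

The query matches the first key more closely, so the output is a weighted average of the value vectors that leans toward the first one. Dividing by sqrt(d_k) keeps the scores from growing with the vector dimension and saturating the softmax.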

Module 5.2: Advanced Topics

- Generative models (VAE, GAN basics)

- Introduction to reinforcement learning

- Model deployment and optimization

- Staying current with deep learning
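A common optimization before deployment is quantization, which stores weights as 8-bit integers instead of 32-bit floats. The minimal sketch below shows only the arithmetic of symmetric per-tensor int8 quantization on a made-up weight list. Production toolchains, such as TensorFlow Lite's post-training quantization, have their own APIs and more elaborate schemes that this sketch does not reproduce.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # float value of one integer step
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Approximate reconstruction of the original floats."""
    return [v * scale for v in q]

weights = [0.5, -1.27, 0.0, 0.3]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half a quantization step per weight.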

Key Projects in This Path

- Week 2: Simple neural network from scratch

- Week 5: MNIST digit classification

- Week 7: Image recognition with a CNN

- Week 9: Stock prediction with an LSTM

- Week 12: Capstone project combining multiple architectures

Resources

- TensorFlow Official Tutorials

- Fast.ai Deep Learning Course

- Deep Learning Book (Goodfellow, Bengio, Courville)

- Hugging Face Transformers Library

Next Steps

- Specialize in a specific domain (NLP, Computer Vision, etc.)

- Take the AI Tools Mastery path to learn production deployment

- Review the AI Researcher career guide to explore research opportunities


💡 Explore 80+ AI implementation guides on Harshith.org